OpenAI’s MuseNet is a music generation tool built on a deep neural network. It can create 4-minute musical compositions with up to 10 different instruments, and it can combine styles from different eras and genres, such as country, Mozart, and The Beatles. Below is an examination of MuseNet, its underlying technology, and its potential impact on the music creation landscape.

1. Technological Foundation:

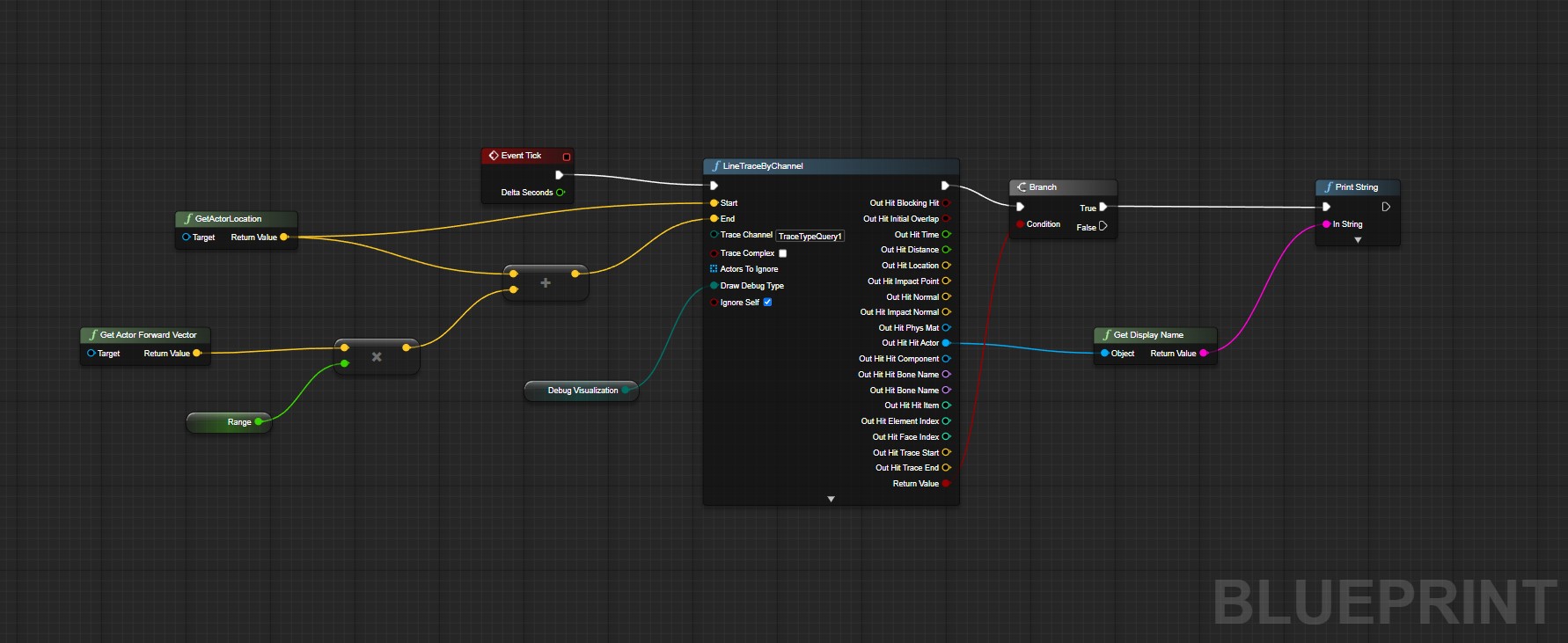

MuseNet is a deep neural network trained on hundreds of thousands of MIDI files. It was not explicitly programmed with an understanding of music; instead, it discovered patterns of harmony, rhythm, and style by learning to predict the next token in a sequence of musical notes. The core technology is similar to that of OpenAI’s GPT-2 language model: a large-scale transformer trained with unsupervised learning to predict the next token in a sequence, whether audio or text [1].
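The next-token objective described above can be illustrated with a deliberately tiny stand-in. The sketch below is not MuseNet's transformer; it swaps in a simple bigram counter so the idea of "learn from sequences, then predict what comes next" fits in a few lines. The note-name tokens, the toy corpus, and the function names `train_bigram`, `predict_next`, and `generate` are all assumptions made for illustration, not MuseNet's actual encoding or API.

```python
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count, for each token, which tokens follow it in the training data."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequently observed successor of `token`, or None."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, length):
    """Greedily extend a sequence one predicted token at a time."""
    out = [start]
    while len(out) < length:
        nxt = predict_next(counts, out[-1])
        if nxt is None:  # no continuation was seen in training
            break
        out.append(nxt)
    return out


# Hypothetical toy corpus: tokens are plain note names, not MuseNet's real encoding.
corpus = [
    ["C4", "E4", "G4", "C5"],
    ["C4", "E4", "G4", "E4"],
    ["G4", "C5", "G4", "C5"],
]
model = train_bigram(corpus)
melody = generate(model, "C4", 4)
print(melody)
```

A transformer replaces the lookup table with a learned function of the whole preceding context rather than only the last token, but the training signal is the same: given what came before, predict what comes next.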

2. Musical Generation Capabilities:

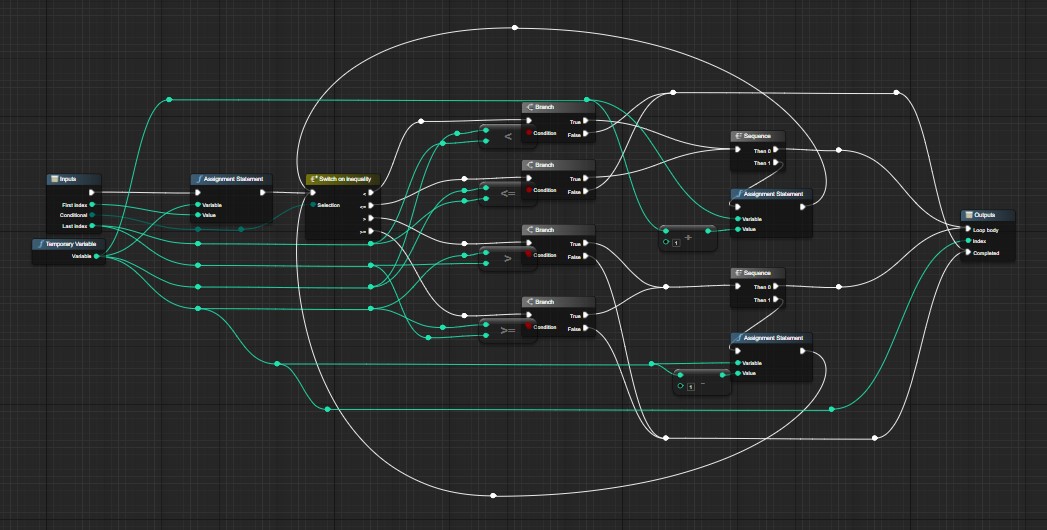

MuseNet can generate 4-minute musical compositions with up to 10 different instruments, and it can blend styles in novel ways, so users can produce original compositions in a variety of musical styles with just a few clicks [2]. For example, given the first six notes of a Chopin Nocturne, it can generate a piece in a pop style with piano, drums, bass, and guitar [1].

3. User Interaction:

Users can interact with MuseNet in two modes: simple and advanced. In simple mode, users hear random, uncurated samples that the system has pre-generated. In advanced mode, they interact with the model directly and can create entirely new pieces, although completions take longer [1].

4. Limitations:

MuseNet has certain limitations. The instruments a user suggests are not strictly adhered to, and the model may struggle with odd pairings of styles and instruments. Generations tend to sound more natural when the chosen instruments are close to the composer’s or band’s usual style [1].

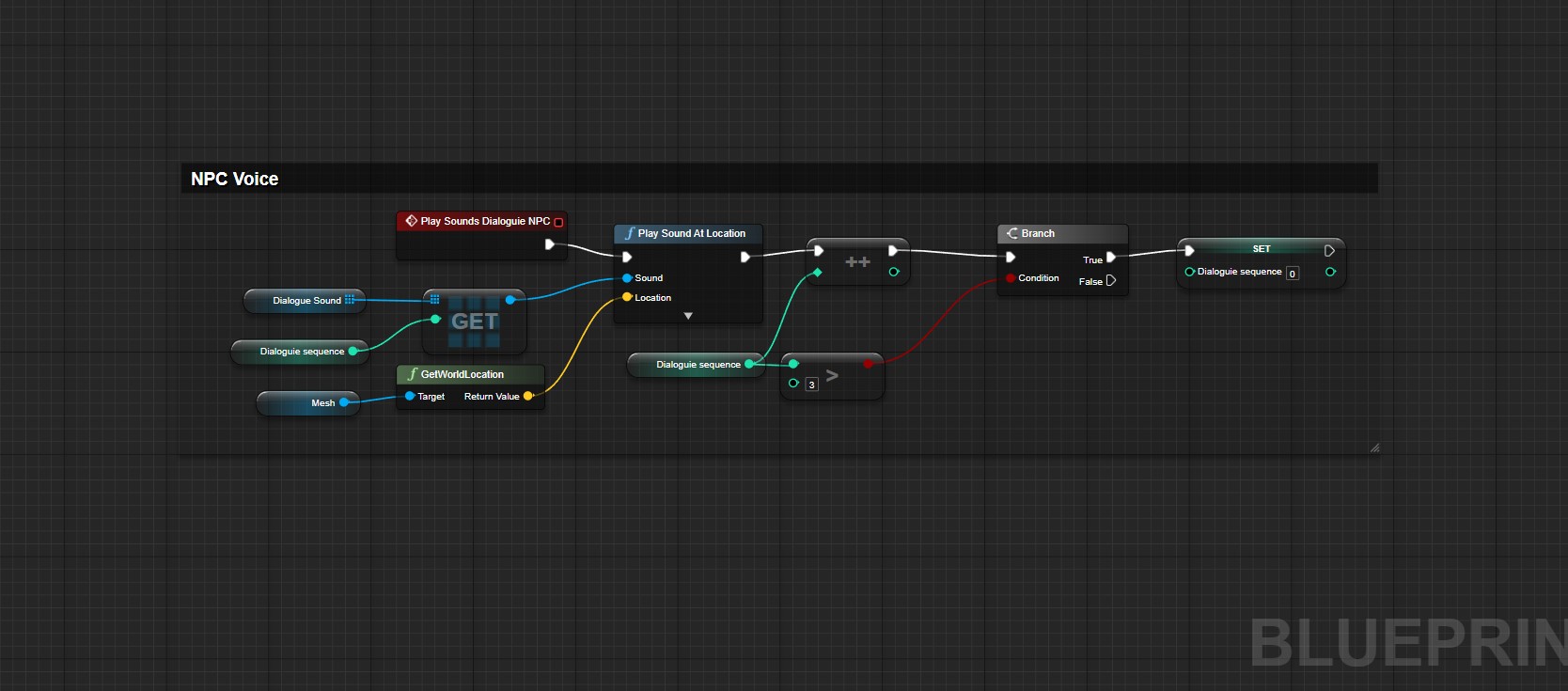

5. Composer and Instrumentation Tokens:

MuseNet uses composer and instrumentation tokens to give more control over the kinds of samples it generates. These tokens were prepended to each sample during training, so at generation time the model can be conditioned on them to create samples in a chosen style [1].
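As a rough illustration of the prepending step, the sketch below builds a token sequence with a composer token and instrument tokens placed ahead of the notes. The token spellings (`<composer:...>`, `<instrument:...>`), the note names, and the helper `build_conditioned_sequence` are hypothetical; the source does not describe MuseNet's actual token format.

```python
def build_conditioned_sequence(composer, instruments, note_tokens):
    """Prepend one composer token and one token per instrument to the notes."""
    prefix = [f"<composer:{composer}>"]
    prefix += [f"<instrument:{name}>" for name in instruments]
    return prefix + list(note_tokens)


# Training time: every sample carries its conditioning prefix (hypothetical tokens).
training_example = build_conditioned_sequence(
    "chopin", ["piano"], ["C4", "E4", "G4"]
)

# Generation time: supply only the prefix and let the model continue from it.
prompt = build_conditioned_sequence(
    "beatles", ["piano", "drums", "bass", "guitar"], []
)
print(training_example)
print(prompt)
```

Because the prefix is part of the same sequence the model learns to continue, no separate conditioning mechanism is needed in this scheme: choosing a style amounts to choosing which tokens the generated sequence starts with.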

6. Training Dataset:

The training data for MuseNet was collected from various sources, including large collections of MIDI files donated by ClassicalArchives and BitMidi. It covers a wide range of musical styles, including jazz, pop, African, Indian, and Arabic [1].

7. Applications and Future Prospects:

The versatility and ease of use of MuseNet make it a promising tool for musicians and non-musicians alike. It gives users a platform to explore a wide range of musical styles and create original compositions, and it offers a glimpse into the future of AI-generated music [3].

MuseNet represents a significant step for AI-generated music, showing that an AI system can learn to generate complex musical compositions. By combining technological innovation with musical creativity, it is poised to be a valuable asset in the evolving landscape of music generation and a precursor to more advanced AI music generation tools.